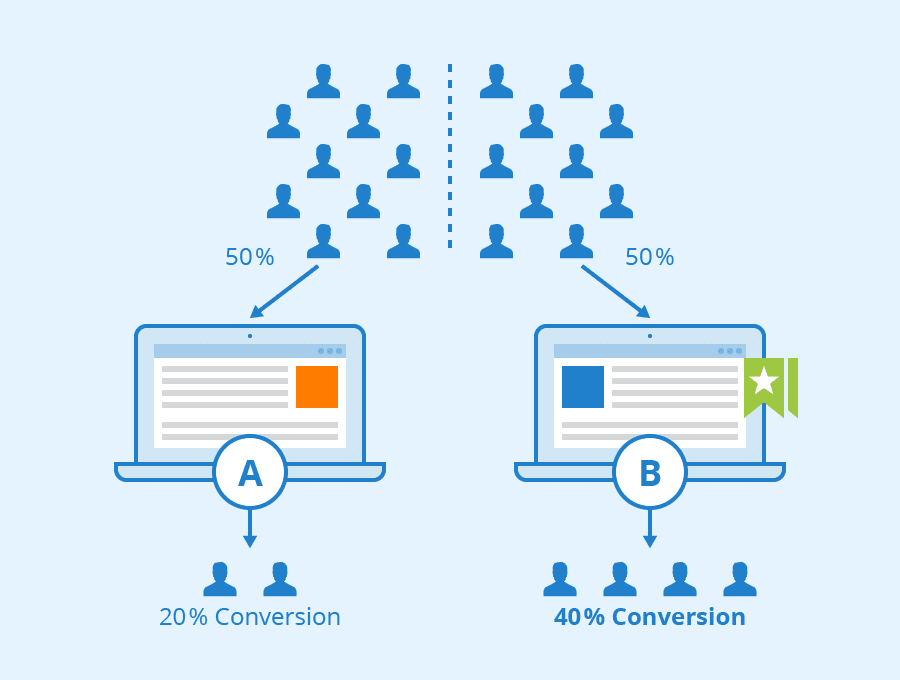

A properly designed A/B test has these essential elements:

- A clear, measurable goal (conversion rate, click-through rate, revenue)

- One isolated variable being tested

- Random audience assignment

- Sufficient sample size to detect meaningful differences

- Appropriate duration to account for time-based factors

Without these elements, your test results may lead to incorrect conclusions and costly mistakes.
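One common way to get random but stable audience assignment is to hash a user identifier. The sketch below is a minimal illustration, not a prescribed method: the function name `assign_variant`, the experiment name `cta-colour` and the `user-N` identifiers are all hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant with roughly equal probability."""
    # Salting with the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical traffic: 10,000 made-up user IDs.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-colour")] += 1
print(counts)
```

Because the assignment depends only on the identifier and the experiment name, a returning visitor always sees the same variation, and the split should come out close to even.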

Common A/B testing mistakes

1. Testing too many variables at once

The problem: When you change multiple elements simultaneously, you can’t identify which change caused the effect.

The solution: Test one variable at a time. If you need to evaluate several changes together, use a multivariate test, which treats each combination of changes as its own variation and therefore needs considerably more traffic.

2. Ending tests too early

The problem: Stopping as soon as you see “significant” results inflates the false-positive rate. Every extra look at the data gives random fluctuations another chance to cross the significance threshold.

The solution: Determine sample size in advance using power analysis and commit to the full test duration regardless of early results.
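A small simulation makes the risk of early stopping concrete. This is a rough sketch under assumed settings (300 simulated tests, 2,000 visitors per arm, ten interim looks, a 5% conversion rate). Both arms are identical, so every "significant" result is a false positive.

```python
import math
import random

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))

def simulate(n_tests=300, n_per_arm=2000, looks=10, rate=0.05, alpha=0.05, seed=1):
    """Run A/A tests and count false positives with and without peeking."""
    rng = random.Random(seed)
    step = n_per_arm // looks
    any_look = 0    # tests that looked significant at some interim look
    final_look = 0  # tests that were significant at the planned end
    for _ in range(n_tests):
        conv_a = conv_b = 0
        hit_any = False
        for look in range(1, looks + 1):
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            p = p_value(conv_a, look * step, conv_b, look * step)
            if p < alpha:
                hit_any = True
        any_look += hit_any
        final_look += p < alpha  # p now holds the last look's value
    return any_look / n_tests, final_look / n_tests

peeking_rate, fixed_rate = simulate()
print(f"false positives when stopping at any significant look: {peeking_rate:.1%}")
print(f"false positives when waiting for the planned end: {fixed_rate:.1%}")
```

Since the final look is also one of the interim looks, the peeking rate can never come out lower than the fixed rate, and it is typically well above the nominal 5%.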

3. Ignoring statistical significance

The problem: Acting on results that aren’t statistically significant wastes resources on differences that may be due to chance alone.

The solution: Use a statistical significance calculator and act only when the p-value is 0.05 or lower (the conventional threshold, often described as 95% confidence).
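For conversion-rate tests, many calculators use something like a two-proportion z-test. The sketch below shows one common version (pooled variance, two-sided). The visitor and conversion counts are made up for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # erfc(|z| / sqrt(2)) equals the two-sided tail area of the standard normal.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: 400/8000 conversions for control, 480/8000 for the variant.
z, p = two_proportion_z_test(conv_a=400, n_a=8000, conv_b=480, n_b=8000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```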

4. Overlooking practical significance

The problem: Statistical significance doesn’t mean business impact. A 0.1% improvement may be statistically significant but not worth implementing.

The solution: Define a minimum effect size that matters for your business before running the test.
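One way to keep statistical and practical significance apart is to write the decision rule down before the test starts. This is a hypothetical sketch: the function `decide`, its labels and the 5% minimum relative lift are illustrative assumptions, not a standard.

```python
def relative_lift(rate_control: float, rate_variant: float) -> float:
    """Relative change of the variant over control, e.g. 0.20 for +20%."""
    return (rate_variant - rate_control) / rate_control

def decide(rate_control, rate_variant, p_value, min_lift=0.05, alpha=0.05):
    """Combine the significance check with a pre-agreed minimum effect size."""
    lift = relative_lift(rate_control, rate_variant)
    if p_value >= alpha:
        return "inconclusive"
    if lift <= 0:
        return "variant is worse"
    if lift < min_lift:
        return "real but below the minimum effect"
    return "worth implementing"

# Hypothetical outcomes: a tiny but significant lift, then a large one.
print(decide(0.0500, 0.0505, p_value=0.01))
print(decide(0.0500, 0.0600, p_value=0.006))
```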

Step-by-step guide to effective A/B testing

1. Define your hypothesis

Start with a clear, testable hypothesis:

“Changing [specific element] will [increase/decrease] [specific metric] by [estimated amount] because [rational explanation].”

Example: “Changing our CTA button from green to orange will increase click-through rates by at least 5% because orange creates more visual contrast on our page.”

2. Calculate required sample size

Use an A/B test calculator to determine how many visitors you need based on:

- Your baseline conversion rate

- The minimum improvement you care about detecting

- Your desired confidence level (typically 95%)

- Statistical power (typically 80%)

For example, with a 5% baseline conversion rate and a 20% relative improvement to detect (5% to 6%), you need approximately 8,200 visitors per variation for a two-sided test at 95% confidence and 80% power. A one-sided test needs about 6,400.
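The four inputs above plug into the standard normal-approximation formula for comparing two proportions. The sketch below assumes a two-sided test with unpooled variance. Calculators differ in these choices, so a given tool may return a somewhat different figure.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline, relative_lift, alpha=0.05, power=0.80):
    """Visitors needed per variation to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

n = sample_size_per_variation(baseline=0.05, relative_lift=0.20)
print(n)
```

Under these assumptions the example of a 5% baseline and a 20% relative lift comes out a little above 8,000 visitors per variation. Halving the detectable lift roughly quadruples the requirement.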

3. Set up proper tracking

Ensure your analytics properly track:

- Primary conversion goal

- Secondary metrics that might be affected

- Segment data for different user groups

Tools like Optimizely, VWO, or AB Tasty can help implement proper tracking. Google Optimize, long the usual free option, was discontinued by Google in September 2023.

4. Run the test for the full duration

Short tests can produce misleading results due to:

- Day-of-week effects

- Temporary external factors

- Random fluctuations

Run most tests for at least one full business cycle (often 2-4 weeks) even if results appear significant earlier.
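The planned duration can be worked out from the required sample size and your traffic, then rounded up to whole weeks so every weekday is covered equally. This is a minimal sketch: the function name, the two-week floor and the traffic figures are assumptions for illustration.

```python
import math

def planned_duration_days(required_per_variation, variations, daily_visitors, min_weeks=2):
    """Days to run the test, rounded up to full weeks with a minimum length."""
    total_needed = required_per_variation * variations
    days_for_sample = math.ceil(total_needed / daily_visitors)
    weeks = max(min_weeks, math.ceil(days_for_sample / 7))
    return weeks * 7

# Hypothetical site: 8,000 visitors needed per variation, 1,500 visitors a day.
days = planned_duration_days(required_per_variation=8000, variations=2, daily_visitors=1500)
print(days)  # 14
```

If the result runs to several months, that is a sign the site may not have enough traffic for this particular test.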

5. Analyse results properly

Don’t just look at conversion rates. Consider:

- Statistical significance (p-value)

- Confidence intervals around your estimate

- Results across different segments

- Secondary metrics and potential negative impacts

Example: Your test shows a 10% increase in click-through rate but a 5% decrease in actual purchases – not a true win.
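A confidence interval on each metric shows how much uncertainty sits behind a headline number, including on secondary metrics. The sketch below uses a simple Wald interval for the difference between two rates. The counts are invented to mirror the click-through and purchase example.

```python
import math
from statistics import NormalDist

def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald interval for the absolute difference in rates (variant minus control)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se

# Hypothetical counts: (control conversions, control n, variant conversions, variant n).
metrics = {
    "click_through": (1000, 10_000, 1100, 10_000),
    "purchase": (400, 10_000, 380, 10_000),
}
intervals = {name: diff_confidence_interval(*c) for name, c in metrics.items()}
for name, (low, high) in intervals.items():
    print(f"{name}: [{low:+.4f}, {high:+.4f}]")
```

With these made-up numbers the click-through interval sits entirely above zero, while the purchase interval spans zero. The data cannot yet say whether purchases really fell, which is a reason to keep watching that metric rather than to ignore it.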

A/B testing tools comparison

| Tool | Best for | Limitations |
|---|---|---|
| Google Optimize (discontinued September 2023) | Beginners, tight budgets, Google Analytics integration | Limited test variations, less advanced targeting |
| Optimizely | Enterprise, complex tests, sophisticated targeting | Higher cost, steep learning curve |
| VWO | Mid-sized companies, good balance of features and usability | Less powerful than enterprise solutions |
| AB Tasty | Marketing teams, personalisation features | Less developer-friendly |

When not to A/B test

A/B testing isn’t always the right approach:

- Low traffic sites: If getting sufficient sample size takes months

- Major redesigns: Too many variables change simultaneously

- Brand new features: No baseline exists for comparison

- Legal or compliance changes: Must be implemented regardless of performance

In these cases, consider usability testing, focus groups, or pre/post analysis instead.

Advanced A/B testing approaches

Once you master the basics, consider these advanced techniques:

- Bandit algorithms: Dynamically adjust traffic allocation to favour better-performing variations

- Sequential testing: Continuously monitor results with adjusted statistical thresholds

- Personalisation testing: Test different variations for different audience segments
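To show what a bandit's dynamic traffic allocation looks like in practice, here is a minimal Thompson sampling sketch. Thompson sampling is one of several bandit algorithms. The true conversion rates, visitor count and function name are assumptions for the simulation, and a real system would not know the true rates.

```python
import random

def thompson_sampling(true_rates, n_visitors=5000, seed=0):
    """Simulate Beta-Bernoulli Thompson sampling; return visitors sent to each arm."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(n_visitors):
        # Draw a plausible conversion rate for each arm from its Beta posterior.
        samples = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = samples.index(max(samples))
        # Simulate whether this visitor converts on the chosen arm.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]

# Hypothetical true rates: variation B converts twice as well as A.
pulls = thompson_sampling(true_rates=[0.05, 0.10])
print(pulls)
```

As evidence accumulates, most visitors end up on the stronger variation. The trade-off is that the weaker arm gets less data, so estimates of its conversion rate stay less precise than in a fixed 50/50 test.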
